Ch14 结构嵌套模型的G估计¶

内容摘要¶

在前两章中,我们描述了IP权重和标准化,以估计戒烟对体重增加的平均因果关系。在本章中,我们描述了估计平均因果效应的第三种方法:g估计。我们使用相同的NHEFS观测数据,并提供简单的计算机代码来进行分析。

IP加权,标准化和g估计通常统称为g方法,because they are designed for application to generalized treatment contrasts involving treatments that vary over time. 本书第二部分中将g方法应用于不会随时间变化的治疗方法 may then be overkill since there are alternative, simpler approaches. 但是,通过在相对简单的环境中展示g方法,我们可以专注于 their main features,同时避免了第三部分中描述的更复杂的问题。

IP加权和标准化在第2章中进行了介绍,然后是在第12章和第13章。In contrast, 我们需要等到 Part II 来描述g估计。原因是:describing g-estimation is facilitated by the specification of a structural model, even if the model is saturated.

通过g估计来估计其参数的模型称为结构嵌套模型(structural nested models)。这三种g方法基于不同的建模假设。

FAQ:

\(G\) 估计只能用于调整混淆,不能用于选择偏差? 而 IP Weighting and standardization 可以用于 confounding and selection bias

14.1 The causal question revisited¶

估计量:ACE of smoking cessation \(A\) on weight gain \(Y\) in each strata defined by the covariates \(L\)

如前几章所述, we restricted the analysis to NHEFS individuals with known sex, age, race, weight, height, education, alcohol use and intensity of smoking at the baseline (1971-75) and followup (1982) visits, and who answered the medical history questionnaire at baseline.

在上两章中,我们应用了IP加权和标准化来估计戒烟(治疗)对体重增加(结果)的平均因果效应。We assumed that exchangeability of the treated and the untreated was achieved conditional on the \(L\) variables: sex, age, race, education, intensity and duration of smoking, physical activity in daily life, recreational exercise, and weight.We defined the average causal effect on the difference scale as \(E[Y^{ a=1,c=0}]−E[Y^{ a=0,c=0}]\), that is, the difference in mean weight that would have been observed if everybody had been treated and uncensored compared with untreated and uncensored.

The quantity \(E[Y^{ a=1,c=0}]−E[Y^{ a=0,c=0}]\) measures the average causal effect in the entire population. 但是有时候,人们可能会对因果效应 in a subset of the population 感兴趣。For example, one may want to estimate the average causal effect in women, in individuals aged 45, in those with low educational level, etc.

在本章中,我们将使用g估计来估计 ACE of smoking cessation \(A\) on weight gain \(Y\) in each strata defined by the covariates \(L\). This conditional effect is represented by \(E[Y^{a, c=0}|L]−E[Y^{a=0, c=0}|L]\) 在描述g估计之前,我们将介绍 structural nested models and rank preservation, and, 在下一节中,将以新的方式阐明给定 \(L\) 的可交换性条件。

14.2 Exchangeability revisited¶

As a reminder, 条件可交换性意味着 in any subset of the study population in which all individuals have the same values of \(L\), those who did not quit smoking \((A = 0)\) would have had the same mean weight gain as those who did quit smoking \((A = 1)\) if they had not quit, and vice versa.

理解 \(g\)-估计需要两个方面:

理解模型 \(logit P(A=1|Y^{a=0}, L) = \alpha_0 + \alpha_1 Y^{a=0} + \alpha_2 L\) 中系数 \(\alpha_1\) 应该是多少?

the structural model

14.3 Structural nested mean models¶

Our structural model for the conditional causal effect would be \(E[Y^a − Y^{ a=0}|L] = \beta_1 a\). 更一般而言,可能会有effect modification. 例如,在重度吸烟者中戒烟的因果效应可能比在轻度吸烟者中更大。 To allow for the causal effect to depend on \(L\) we can add a product term to the structural model, i.e., \(E[Y^a − Y^{ a=0}| L] = \beta_1 a + \beta_2 a L\), where \(\beta_2\) is a vector of parameters. Under conditional exchangeability \(Y^a \perp A | L\), 治疗和未治疗的条件因果效应相同 because the treated and the untreated are, on average, the same type of people within levels of \(L\). 因此,在可交换性下,结构模型也可以写成

which is referred to as a structural nested mean model. 边际结构模型与结构嵌套模型之间的关系是:

It leaves the mean counterfactual outcomes under no treatment \(E[Y^{a=0}|L]\) completely unspecified.

Estimating this difference requires adjustment for (两种偏差)both confounding and selection bias (due to censoring \(C = 1\)) for the effect of treatment \(A\). As described in the previous two chapters, IP weighting and standardization can be used to adjust for these two biases. 如前两章所述,IP加权和标准化可用于针对这两个偏差进行调整。另一方面,\(G\) 估计只能用于调整混淆,不能用于选择偏差。

现在,我们将注意力转移到 rank preservation 的概念上,这将有助于我们描述结构嵌套模型的 g 估计。

14.4 Rank preservation¶

We would then have two lists of individuals ordered from larger to smaller value of the corresponding counterfactual outcome. If both lists are in identical order we say that there is rank preservation.

然后,我们将有 two lists of individuals,从相应的反事实结果按从大到小的顺序排序。如果两个列表的顺序相同,我们就说有 rank preservation。

我们没有理由要在实践中使用这种不切实际的 rank-preserving model 模型。但是,我们将在下一节中使用它来介绍g估计,因为对于秩排序模型,g估计更容易理解,并且对于秩排序和非秩排序模型,g 估计过程实际上是相同的。

14.5 G-estimation¶

This section links the material in the previous three sections. 我们的目标是 is estimating the parameters of the structural nested mean model \(E[Y^a − Y^{ a=0}| L, A=a] = \beta_1 a\). For simplicity, we first consider a model with a single parameter \(\beta_1\).

We also assume that the additive rank-preserving model \(Y_i^a - Y_i^{a=0} = \psi_1 a\) is correctly specified for all individuals \(i\). 因为所有个体的模型都是相同的,we write the model in the equivalent form

g估计的第一步是将模型链接到观测数据,Therefore, if we replace the fixed value \(a\) in the structural model by each individual’s value \(A\)–which will be 1 for some and 0 for others–then we can replace the counterfactual outcome \(Y^a\) by the individual’s observed outcome \(Y^A = Y\). 于是

14.6 Structural nested models with two or more parameters¶

We have so far considered a structural nested mean model with a single parameter, 但是我们需要交互项。

Fortunately, the g-estimation procedure described in the previous section can be generalized to models with product terms.

此时,我们的优化问题有所不同。

In the more general case, we would consider a model that allows the average causal effect of smoking cessation to vary across all strata of the variables in \(L\). 但是实际上,很少使用具有多个参数的结构嵌套模型。

Programs¶

书籍中例子代码

[1]:

## Setup and imports

%matplotlib inline

import warnings

warnings.filterwarnings('ignore')

from collections import namedtuple

import numpy as np

import pandas as pd

import statsmodels.api as sm

import scipy.stats

import scipy.optimize

import matplotlib.pyplot as plt

nhefs_all = pd.read_excel('NHEFS.xls')

nhefs_all.shape

WARNING *** OLE2 inconsistency: SSCS size is 0 but SSAT size is non-zero

[1]:

(1629, 64)

Add a variable for censored weight, then add'constant', dummy variables, and squared variables, as done in previous chapters

[2]:

nhefs_all['censored'] = nhefs_all.wt82.isnull().astype('int')

nhefs_all['constant'] = 1

edu_dummies = pd.get_dummies(nhefs_all.education, prefix='edu')

exercise_dummies = pd.get_dummies(nhefs_all.exercise, prefix='exercise')

active_dummies = pd.get_dummies(nhefs_all.active, prefix='active')

nhefs_all = pd.concat(

[nhefs_all, edu_dummies, exercise_dummies, active_dummies],

axis=1

)

for col in ['age', 'wt71', 'smokeintensity', 'smokeyrs']:

nhefs_all['{}^2'.format(col)] = nhefs_all[col] * nhefs_all[col]

Subset the data as described in the margin, pg 149.

[3]:

restriction_cols = [

'sex', 'age', 'race', 'wt82', 'ht', 'school', 'alcoholpy', 'smokeintensity'

]

missing = nhefs_all[restriction_cols].isnull().any(axis=1)

nhefs = nhefs_all.loc[~missing]

nhefs.shape

[3]:

(1566, 81)

现在,我们将注意力转移到 rank preservation 的概念上,这将有助于我们描述结构嵌套模型的 g 估计。

Program 14.1¶

“In our smoking cessation example, we will use the nonstabilized IP weights \(W^C = 1 \, / \, \Pr[C = 0|L,A]\) that we estimated in Chapter 12. Again we assume that the vector of variables \(L\) is sufficient to adjust for both confounding and selection bias.” pg 174

[4]:

X_ip = nhefs_all[[

'constant',

'sex', 'race', 'age', 'age^2', 'edu_2', 'edu_3', 'edu_4', 'edu_5',

'smokeintensity', 'smokeintensity^2', 'smokeyrs', 'smokeyrs^2',

'exercise_1', 'exercise_2', 'active_1', 'active_2', 'wt71', 'wt71^2',

'qsmk'

]]

We can reuse a function from chapter 12 to help us create IP weights

[5]:

def logit_ip_f(y, X):

"""

Create the f(y|X) part of IP weights

from logistic regression

Parameters

----------

y : Pandas Series

X : Pandas DataFrame

Returns

-------

Numpy array of IP weights

"""

model = sm.Logit(y, X)

res = model.fit()

weights = np.zeros(X.shape[0])

weights[y == 1] = res.predict(X.loc[y == 1])

weights[y == 0] = 1 - res.predict(X.loc[y == 0])

return weights

[6]:

weights = 1 / logit_ip_f(nhefs_all.censored, X_ip)

Optimization terminated successfully.

Current function value: 0.142836

Iterations 8

[7]:

ip_censor = weights[nhefs_all.censored == 0]

[8]:

print(' min mean max')

print('------------------------')

print('{:>6.2f} {:>6.2f} {:>6.2f}'.format(

ip_censor.min(),

ip_censor.mean(),

ip_censor.max()

))

min mean max

------------------------

1.00 1.04 1.82

Still Program 14.1

“all individuals can be ranked according to the value of their observed outcome Y”

[9]:

ranked = nhefs.sort_values('wt82_71', ascending=False)

[10]:

ranked[['seqn', 'wt82_71']][:2]

[10]:

| seqn | wt82_71 | |

|---|---|---|

| 1366 | 23522 | 48.538386 |

| 259 | 6928 | 47.511303 |

[11]:

ranked[['seqn', 'wt82_71']][-1:]

[11]:

| seqn | wt82_71 | |

|---|---|---|

| 1328 | 23321 | -41.28047 |

Program 14.2¶

“In our smoking cessation example, we first computed each individual’s value of the 31 candidates …”

We’re going to need a few different things from the regressions, wo we’ll first create a container that holds that information together.

We’ll want 1. absolute value of the coefficient (the basis of comparison), 2. the value of the coefficient 3. the value of psi that produced the coefficient, and 4. the p-value (for finding the 95% confidence interval)

[12]:

GInfo = namedtuple('GInfo', ['abs_alpha', 'alpha', 'psi', 'pvalue'])

Now we’ll create a function that will perform the regression and return the info we need

[13]:

def logit_g_info(psi, data, y, X_cols, weights):

"""

Return logistic regression parameters to identify best `psi`

Note: this is written specifically for the problem in this program

Paramters

---------

psi : float

data : Pandas DataFrame

needs to contain the given `X_cols`

y : Pandas Series or Numpy array

X_cols : list of strings

column names for `X`

weights : Pandas Series or Numpy array

Returns

-------

GInfo namedtuple, containing

- absolute value of H_of_psi coefficient

- H_of_psi coefficient

- psi value

- p-value for H_of_psi coefficient

"""

data['H_of_psi'] = data.wt82_71 - psi * data.qsmk

X = data[X_cols]

gee = sm.GEE(y, X, groups=data.seqn, weights=weights, family=sm.families.Binomial())

res = gee.fit()

alpha = res.params.H_of_psi

pvalue = res.pvalues.H_of_psi

return GInfo(abs(alpha), alpha, psi, pvalue)

For all uses here, y and the X columns are the same.

[14]:

y = nhefs.qsmk

X_cols = [

'constant',

'sex', 'race', 'age', 'age^2', 'edu_2', 'edu_3', 'edu_4', 'edu_5',

'smokeintensity', 'smokeintensity^2', 'smokeyrs', 'smokeyrs^2',

'exercise_1', 'exercise_2', 'active_1', 'active_2', 'wt71', 'wt71^2',

'H_of_psi'

]

We’ll run the regression once for the known right answer

[15]:

g_info = logit_g_info(3.446, nhefs, y, X_cols, weights=ip_censor)

[16]:

print('psi: {} regression coefficient: {:>0.2g}'.format(g_info.psi, g_info.alpha))

psi: 3.446 regression coefficient: -1.9e-06

Now we’ll do the course-grained search for \(\psi\) with \(H(2.0), H(2.1), H(2.2), \ldots , H(4.9)\) and \(H(5.0)\) (pg 178)

[17]:

psi_vals = np.arange(2.0, 5.0, 0.1)

[18]:

g_info = [

logit_g_info(psi, nhefs, nhefs.qsmk, X_cols, ip_censor)

for psi in psi_vals

]

[19]:

# by default, `min` will minimize the first value,

# which in this case is the absolute value of the coefficient

best = min(g_info)

[20]:

print('best psi: {:>0.4f} best alpha: {:>0.5f}'.format(best.psi, best.alpha))

best psi: 3.4000 best alpha: 0.00086

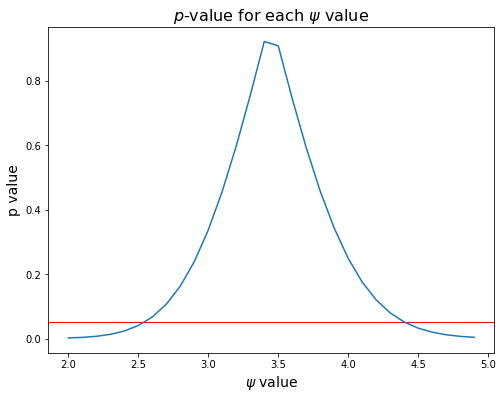

The plot below shows \(p\)-value as a function of \(\psi\), with a red line at 0.05.

To find the 95% confidence interval, we find the last values that are below the line, coming from the left and the right.

[21]:

fig, ax = plt.subplots(figsize=(8, 6))

ax.axhline(0.05, c='r', linewidth=1)

ax.plot(psi_vals, [g.pvalue for g in g_info])

ax.set_xlabel('$\psi$ value', fontsize=14)

ax.set_ylabel('p value', fontsize=14)

ax.set_title('$p$-value for each $\psi$ value', fontsize=16);

[22]:

cutoff = 0.05

ci_lo = max([g.psi for g in g_info[:len(g_info)//2] if g.pvalue < cutoff])

ci_hi = min([g.psi for g in g_info[len(g_info)//2:] if g.pvalue < cutoff])

print('95% confidence interval: ({:>0.2f}, {:>0.2f})'.format(ci_lo, ci_hi))

95% confidence interval: (2.50, 4.50)

We can run a finer search between 3.4 and 3.5, with steps of 0.001. That can be done using the same steps as above, but using

psi_vals = np.arange(3.4, 3.5, 0.001)

Here, we’ll use automatic function optimization to find a more exact \(\psi\).

The second cell below can take a while to run.

[23]:

def just_abs_alpha(psi):

g_info = logit_g_info(psi, nhefs, nhefs.qsmk, X_cols, ip_censor)

return g_info.abs_alpha

[24]:

scipy.optimize.minimize(

fun=just_abs_alpha,

x0=4.0

)

[24]:

fun: 1.2423094722888098e-10

hess_inv: array([[3.96249031e-07]])

jac: array([0.00212878])

message: 'Desired error not necessarily achieved due to precision loss.'

nfev: 270

nit: 2

njev: 86

status: 2

success: False

x: array([3.4458988])

The x value in the output is the estimated \(\psi\), which rounds to 3.446, as expected

Program 14.3¶

We can solve for \(\psi\) directly. From Technical Point 14.2, we have

where the sum is over the uncensored observations, \(W^C\) is the IP weights, \(Y\) is wt82_71, \(A\) is qsmk, and \(\mathrm{E}[A|L_i]\) is the predicted qsmk from the model below.

[25]:

A = nhefs.qsmk

X = nhefs[[

'constant',

'sex', 'race', 'age', 'age^2', 'edu_2', 'edu_3', 'edu_4', 'edu_5',

'smokeintensity', 'smokeintensity^2', 'smokeyrs', 'smokeyrs^2',

'exercise_1', 'exercise_2', 'active_1', 'active_2', 'wt71', 'wt71^2',

]]

[26]:

glm = sm.GLM(

A,

X,

freq_weights=ip_censor,

family=sm.families.Binomial()

)

res = glm.fit()

[27]:

res.summary().tables[1]

[27]:

| coef | std err | z | P>|z| | [0.025 | 0.975] | |

|---|---|---|---|---|---|---|

| constant | -2.4029 | 1.314 | -1.829 | 0.067 | -4.978 | 0.172 |

| sex | -0.5137 | 0.150 | -3.422 | 0.001 | -0.808 | -0.219 |

| race | -0.8609 | 0.206 | -4.178 | 0.000 | -1.265 | -0.457 |

| age | 0.1152 | 0.049 | 2.328 | 0.020 | 0.018 | 0.212 |

| age^2 | -0.0008 | 0.001 | -1.478 | 0.140 | -0.002 | 0.000 |

| edu_2 | -0.0289 | 0.193 | -0.150 | 0.881 | -0.407 | 0.349 |

| edu_3 | 0.0877 | 0.173 | 0.507 | 0.612 | -0.252 | 0.427 |

| edu_4 | 0.0664 | 0.266 | 0.249 | 0.803 | -0.455 | 0.588 |

| edu_5 | 0.4711 | 0.221 | 2.130 | 0.033 | 0.038 | 0.904 |

| smokeintensity | -0.0783 | 0.015 | -5.271 | 0.000 | -0.107 | -0.049 |

| smokeintensity^2 | 0.0011 | 0.000 | 3.854 | 0.000 | 0.001 | 0.002 |

| smokeyrs | -0.0711 | 0.027 | -2.627 | 0.009 | -0.124 | -0.018 |

| smokeyrs^2 | 0.0008 | 0.000 | 1.830 | 0.067 | -5.78e-05 | 0.002 |

| exercise_1 | 0.3363 | 0.175 | 1.921 | 0.055 | -0.007 | 0.679 |

| exercise_2 | 0.3800 | 0.182 | 2.093 | 0.036 | 0.024 | 0.736 |

| active_1 | 0.0341 | 0.130 | 0.262 | 0.793 | -0.221 | 0.289 |

| active_2 | 0.2135 | 0.206 | 1.036 | 0.300 | -0.190 | 0.617 |

| wt71 | -0.0077 | 0.025 | -0.312 | 0.755 | -0.056 | 0.041 |

| wt71^2 | 8.655e-05 | 0.000 | 0.574 | 0.566 | -0.000 | 0.000 |

Using the equation at the top of this section, \(\hat{\psi}\) is calculated as below

[28]:

A_pred = res.predict(X)

Y = nhefs.wt82_71

estimate = (

(ip_censor * Y * (A - A_pred)).sum() /

(ip_censor * A * (A - A_pred)).sum()

)

[29]:

estimate

[29]:

3.4458988033695004

“If \(\psi\) is D-dimensional…”

The following is a direct translation of what’s in the R and Stata code examples

[30]:

diff = A - A_pred

diff2 = ip_censor * diff

[31]:

lhs = np.array([

[

(A * diff2).sum(),

(A * nhefs.smokeintensity * diff2).sum()

],

[

(A * nhefs.smokeintensity * diff2).sum(),

(A * nhefs.smokeintensity**2 * diff2).sum()

]

])

lhs

[31]:

array([[ 292.07618742, 5701.54685801],

[ 5701.54685801, 153044.85432632]])

[32]:

rhs = np.array([

[(Y * diff2).sum()],

[(Y * nhefs.smokeintensity * diff2).sum()]

])

rhs

[32]:

array([[ 1006.46498471],

[20901.06799989]])

[33]:

psi = np.linalg.solve(lhs,rhs)

psi

[33]:

array([[2.85947039],

[0.03004128]])