Judea Pearl

This article mainly discusses The Book of Why, Judea Pearl, Pearl's Causality (2009), and several other papers.


Interview on AGI

2019, 12/11 Judea Pearl: Causal Reasoning, Counterfactuals, Bayesian Networks, and the Path to AGI | AI Podcast

[1]:

from IPython.display import YouTubeVideo

YouTubeVideo('pEBI0vF45ic')


Key quotes and core ideas from the interview

Everything starts with the question: What is the research question?

Science has not provided us with the mathematics to capture the idea of x causes y and y does not cause x. Because all the equations of physics are symmetrical, algebraic. The equality sign goes both ways.

We start by asking ourselves question: what are the factors that would determine the value of x?

An AI slogan is representation first, discovery second.

They need the human expert to specify the initial model. Initial model could be very qualitative. Just who listens to whom? By whom listens I mean one variable listens to the other.

It’s a difficult question because it’s: find the cause from effect. The easy one is find effect from cause.

I’m working only in the abstract. The abstract is knowledge in, knowledge out, data in between.

All our life, all our intelligence, is built around metaphors, mapping from the unfamiliar to the familiar, but the marriage between the two is a tough thing, which we haven’t yet been able to algorithmatize.

All do-calculus is logical relationships, a powerful generalization of boolean logic.

The end goal is going to be a machine that can answer sophisticated questions: counterfactuals, regret, compassion, responsibility, and free will.

For me, consciousness is having a blueprint of your software.

On expert systems with causation. You have to start with some knowledge, and you’re trying to enrich it. But you don’t enrich it by asking for more rules. You enrich it by asking for the data. To look at the data, and quantifying, and ask queries that you couldn’t answer when you started. You couldn’t because the question is quite complex, and it’s not within the capability of ordinary cognition, of ordinary person, ordinary expert even, to answer.

On the effect of the number of causal links. The more arrows you add, the less likely you are to identify things from purely observational data. So if the whole world is bushy, and everybody affects everybody else, the answer is, you can answer it ahead of time: I cannot answer my query from observational data. I have to go to experiments (the more causal links there are, the less can be identified from observation alone).

On the explanation and meaning of counterfactuals. You have a clash, a logical clash, that cannot exist together. That’s counterfactual, and that is the source of our explanation of the idea of responsibility, regret, and free will.

How babies build causal models. That’s another question: How do the babies come out with the counterfactual model of the world? And babies do that. They know how to play in the crib. They know which ball hits another one, and they learn it by playful manipulation of the world. Their simple world involves all these toys and balls and chimes (laughs) but if you think about it, it’s a complex world. [Lex] We take for granted how complicated– [Judea] And the kids do it by playful manipulation, plus parent guidance, peer wisdom, and hearsay. They meet each other, and they say, “You shouldn’t have taken my toy.”

(How do we construct causal graphs?) [Lex] Now, can we take a leap to trying to learn, when it’s not 20 variables but 20 million variables, trying to learn causation in this world. Not learn, but somehow construct models. I mean, it seems like you would only have to be able to learn, because constructing it manually would be too difficult. Do you have ideas of– [Judea] I think it’s a matter of combining simple models from many, many sources, from many, many disciplines. And many metaphors. Metaphors is an expert system. It’s mapping problem with which you are not familiar, to a problem with which you are familiar. All our life, all our intelligence, is built around metaphors, mapping from the unfamiliar to the familiar, but the marriage between the two is a tough thing, which we haven’t yet been able to algorithmatize.

(Causality, free will, consciousness, and so on) I have a blueprint of myself, though, not a full detail, though, because I cannot get the whole thing problem, but I have a blueprint. So at that level of a blueprint, I can modify things. I can look at myself in the mirror and say, “Hmm, if I tweak this model, I’m going to perform differently.” That is what we mean by free will. For me, consciousness is having a blueprint of your software.

(What would your epitaph say?) I already have a tombstone carved. (both laugh) Oh, boy. The fundamental law of counterfactuals. That’s what it-it’s a simple equation. Put a counterfactual in terms of a model surgery. That’s it, because everything follows from there. If you get that, all the rest. I can die in peace, and my student can derive all my knowledge by mathematical means. The rest follows.

Subtitle transcript

The following is a conversation with Judea Pearl, professor at UCLA and a winner of the Turing Award, which is generally recognized as the Nobel Prize of computing. He’s one of the seminal figures in the field of artificial intelligence, computer science, and statistics. He has developed and championed probabilistic approaches to AI, including Bayesian networks, and profound ideas in causality in general. These ideas are important not just to AI, but to our understanding and practice of science.


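But in the field of AI, the idea of causality, cause and effect, is to many people the core of what is currently missing and what must be developed in order to build truly intelligent systems. For this reason, and many others, his work is worth returning to often. I recommend his most recent book, The Book of Why, which presents the key ideas of Pearl's causal reasoning in a way that is accessible to the general public. This is the “Artificial Intelligence Podcast.” If you enjoy it, subscribe on YouTube, give it five stars on Apple Podcast, support on Patreon, or simply connect with me on Twitter @lexfridman, spelled F-R-I-D-M-A-N.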

And now, here is my conversation with Judea Pearl.

Q1. [Lex] You mentioned in an interview that science is not a collection of facts, but a constant human struggle with the mysteries of nature. What was the first mystery that you can recall that hooked you, that captivated your curiosity?

[Judea] Oh, the first mystery. That’s a good one. Yeah, I remember that. I had a fever for three days when I learned about Descartes and analytic geometry, and I found out that you can do all the construction in geometry using algebra. And I couldn’t get over it. I simply couldn’t get out of bed. (chuckles)

[Lex] What kinda world does analytic geometry unlock?

Well, it connects algebra with geometry, okay? So, Descartes has the idea that geometrical construction and geometrical theorems and assumptions can be articulated in the language of algebra. Which means that all the proofs that we did in high school in trying to prove that the three bisectors meet at one point, and that the (chuckles) All this can be proven by shuffling around notation. That was a traumatic experience.

[Judea] For me, it was, it was, I’m telling you, right? [Lex] So it’s the connection between the different mathematical disciplines, that they all– They’re not even two different languages.

[Lex] Which mathematical discipline is most beautiful? Is geometry it for you? [Judea] Both are beautiful. They have almost the same power. [Lex] But there’s a visual element to geometry. [Judea] The visual element, it’s more transparent. But once you get over to algebra then linear equations is a straight line. This translation is easily absorbed. To pass a tangent to a circle, you know, you have the basic theorems, and you can do it with algebra. But the transition from one to another was really, I thought that Descartes was the greatest mathematician of all times.

Q2. (Pearl: from mathematics to engineering) [Lex] So, if you think of engineering and mathematics as a spectrum. You have walked casually along this spectrum throughout your life. You know, a little bit of engineering and then you’ve done a little bit of mathematics here and there.

[Judea]A little bit. We get a very solid background in mathematics because our teachers were geniuses. Our teachers came from Germany in the 1930s running away from Hitler. They left their careers in Heidelberg and Berlin, and came to teach high school in Israel. And we were the beneficiary of that experiment. When they taught us math, a good way.

[Lex] What’s a good way to teach math? [Judea] Chronologically. [Lex] The people. [Judea] The people behind the theorems, yeah. Their cousins, and their nieces, (chuckles) and their faces, and how they jumped from the bathtub when they screamed, “Eureka” and ran naked in town.

[Lex] So you were almost educated as a historian of math. [Judea] No, we just got a glimpse of that history, together with the theorem, so every exercise in math was connected with a person, and the time of the person, the period. [Lex] The period also mathematically speaking. [Judea] Mathematically speaking, yes, not a paradox. [Lex] Then in university, you had gone on to do engineering. [Judea] Yeah. I got a BS in Engineering at Technion. And then I moved here for graduate school work, and I did the engineering in addition to physics in Rutgers. And it combined very nicely with my thesis, which I did in RCA Laboratories in superconductivity.

Q3. (Which is more powerful, mathematics or engineering? Your view?) [Lex] And then somehow thought to switch to almost computer science software, even, not switched, but longed to become to get into software engineering a little bit, almost in programming, if you can call it that in the 70s. There’s all these disciplines. [Judea] Yeah. [Lex] If you were to pick a favorite, in terms of engineering and mathematics, which path do you think has more beauty? Which path has more power?

[Judea] It’s hard to choose, no? I enjoy doing physics. I even have a vortex named with my name. So, I have investment in immortality. (laughs) [Lex] So, what is a vortex? [Judea] Vortex is in superconductivity. [Lex] In the superconductivity. [Judea] You have terminal current swirling around, one way or the other, so you can store a one or a zero for a computer. That was what we worked on in the 1960s at RCA, and I discovered a few nice phenomena with the vortices. You push current and they move. [Lex] So there’s a Pearl vortex. [Judea] A Pearl vortex, why, you can google it. (both laugh) I didn’t know about it, but the physicist picked up on my thesis, on my PhD thesis, and it became popular when thin film superconductors became important, for high temperature superconductors. So, they call it “Pearl vortex” without my knowledge. (laughs) I discovered it only about 15 years ago.

Q4. (Determinism or indeterminism?) [Lex] You have footprints in all of the sciences, so let’s talk about the universe for a little bit. Is the universe, at the lowest level, deterministic or stochastic, in your amateur philosophy view? Put another way, does God play dice?

We know it is stochastic, right? [Lex] Today. Today we think it is stochastic. Yes, we think because we have the Heisenberg uncertainty principle and we have some experiments to confirm that.

[Lex] All we have is experiments to confirm it. We don’t understand why. [Judea] Why is already– [Lex] You wrote a book about why. (laughs) [Judea] Yeah, it’s a puzzle. It’s a puzzle that you have the dice-flipping machine, or God, and the result of the flipping, propagated with a speed faster than the speed of light. (laughs) We can’t explain it, okay? But, it only governs microscopic phenomena. [Lex] So you don’t think of quantum mechanics as useful for understanding the nature of reality? [Judea] No, it’s diversionary. [Lex] So, in your thinking, the world might as well be deterministic? [Judea] The world is deterministic, and as far as a neural firing is concerned, it is deterministic to first approximation.

Q5. [Lex] What about free will?

[Judea] Free will is also a nice exercise. Free will is an illusion, that we AI people are going to solve. [Lex] So, what do you think, once we solve it, that solution will look like? Once we put it in the page. [Judea] The solution will look like, first of all it will look like a machine. A machine that acts as though it has free will. It communicates with other machines as though they have free will, and you wouldn’t be able to tell the difference between a machine that does and a machine that doesn’t have free will, eh? [Lex] So it propagates the illusion of free will amongst the other machines. [Judea] And faking it is having it, okay? That’s what Turing test is all about. Faking intelligence is intelligence, because it’s not easy to fake. It’s very hard to fake, and you can only fake if you have it. [Lex] That’s such a beautiful statement. (laughs) You can’t fake it if you don’t have it, yup.

Q6. (Understanding probability) [Lex] So, let’s begin at the beginning, with the probability, both philosophically and mathematically, what does it mean to say the probability of something happening is 50%? What is probability?

[Judea] It’s a degree of uncertainty that an agent has about the world. [Lex] You’re expressing some knowledge in that statement. [Judea] Of course. If the probability is 90%, it’s an absolutely different kind of knowledge than if it is 10%. [Lex] But it’s still not solid knowledge, it’s– [Judea] It is solid knowledge, by. If you tell me that with 90% assurance smoking will give you lung cancer in five years, versus 10%, it’s a piece of useful knowledge.

[Lex] So this statistical view of the universe, why is it useful? So we’re swimming in complete uncertainty. Most of everything around you– [Judea] It allows you to predict things with a certain probability, and computing those probabilities is very useful. That’s the whole idea of prediction. And you need prediction to be able to survive. If you cannot predict the future then you just, crossing the street would be extremely fearful.

Q7. (Correlation and causation) [Lex] And so you’ve done a lot of work in causation, so let’s think about correlation. [Judea] I started with probability. [Lex] You started with probability. You’ve invented the Bayesian networks. [Judea] Yeah. [Lex] And so, we’ll dance back and forth between these levels of uncertainty, but what is correlation? So, probability is something happening, is something, but then there’s a bunch of things happening, and sometimes they happen together sometimes not. They’re independent or not, so how do you think about correlation of things?

[Judea] Correlation occurs when two things vary together over a very long time, is one way of measuring it. Or, when you have a bunch of variables that they all vary cohesively, then we have a correlation here, and usually when we think about correlation, we really think causation. Things cannot be correlated unless there is a reason for them to vary together. Why should they vary together? If they don’t see each other, why should they vary together? [Lex] So underlying it somewhere is causation. [Judea] Yes. Hidden in our intuition there is a notion of causation, because we cannot grasp any other logic except causation.

[Lex] And how does conditional probability differ from causation? So, what is conditional probability?

[Judea] Conditional probability is how things vary when one of them stays the same. Now, staying the same means that I have chosen to look only at those incidents where the guy has the same value as the previous one. It’s my choice, as an experimenter, so things that are not correlated before could become correlated. Like for instance, if I have two coins which are uncorrelated, and I choose only those flipping experiments in which a bell rings, and the bell rings when at least one of them is a tail, okay, then suddenly I see correlation between the two coins, because I only looked at the cases where the bell rang. You see, it is my design. It is my ignorance essentially, with my audacity to ignore certain incidents, I suddenly create a correlation where it doesn’t exist physically. [Lex] Right. So, you just outlined one of the flaws of observing the world and trying to infer something from the math about the world from looking at the correlation.
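The two-coins-and-a-bell example can be checked with a small simulation. The sketch below is only an illustration: the sample size, the seed, and the helper name `pearson` are assumptions, not anything from the interview. It flips two independent coins, then keeps only the flips where the bell rang (at least one tail), and compares the correlation before and after that selection.

```python
import random

def pearson(xs, ys):
    """Plain Pearson correlation between two equal-length numeric lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / n
    vx = sum((x - mx) ** 2 for x in xs) / n
    vy = sum((y - my) ** 2 for y in ys) / n
    return cov / (vx * vy) ** 0.5

rng = random.Random(0)  # fixed seed so the run is repeatable
# 1 = tail, 0 = head; the two coins are flipped independently.
flips = [(rng.randint(0, 1), rng.randint(0, 1)) for _ in range(100_000)]

# The bell rings when at least one coin shows a tail.
rang = [(a, b) for a, b in flips if a == 1 or b == 1]

corr_all = pearson([a for a, _ in flips], [b for _, b in flips])
corr_rang = pearson([a for a, _ in rang], [b for _, b in rang])
print(f"all flips:      {corr_all:+.3f}")
print(f"bell rang only: {corr_rang:+.3f}")
```

Over all flips the correlation should be close to zero, while among the bell-rang flips it should be clearly negative: knowing one coin is a head tells you the other must be a tail.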

[Judea] I don’t look at it as a flaw. The world works like that. The flaws come if you try to impose causal logic on correlation. It doesn’t work too well.

[Lex]I mean, but that’s exactly what we do. That has been the majority of science, is you–

[Judea] No, the majority of naive science. Statisticians know it. Statisticians know that if you condition on a third variable, then you can destroy or create correlations among two other variables. They know it. It’s (speaks foreign language). There’s nothing surprises them. That’s why they all dismiss the Simpson’s paradox, look “Ah, we know it!” They don’t know anything about it (laughs). (Statisticians know nothing about it!)
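The reversal Pearl alludes to can be shown with a few counts. The numbers below are hypothetical, chosen only so that the pattern appears, and the subgroup and treatment names are placeholders: treatment A does better than B inside each subgroup, yet B looks better once the subgroups are pooled.

```python
# Hypothetical counts: (recovered, total) per treatment within each subgroup.
counts = {
    "mild":   {"A": (81, 87),   "B": (234, 270)},
    "severe": {"A": (192, 263), "B": (55, 80)},
}

def rate(recovered, total):
    """Recovery rate as a fraction."""
    return recovered / total

# Within each subgroup, A has the higher recovery rate.
a_wins_in_each_group = all(
    rate(*group["A"]) > rate(*group["B"]) for group in counts.values()
)

# Pool the subgroups (ignore the third variable) and compare again.
pooled = {
    t: tuple(sum(group[t][i] for group in counts.values()) for i in (0, 1))
    for t in ("A", "B")
}
b_wins_pooled = rate(*pooled["B"]) > rate(*pooled["A"])

print("A better in every subgroup:", a_wins_in_each_group)
print("B better when pooled:      ", b_wins_pooled)
```

Which of the two comparisons answers the research question cannot be read off the table; it depends on the causal story behind the third variable, which is the point Pearl presses in the conversation.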

Q8. (Causality and philosophy) [Lex] Well, there’s disciplines like psychology, where all the variables are hard to account for, and so, oftentimes there is a leap from correlation to causation. [Judea] What do you mean, a leap? Who is trying to get causation from correlation? There’s no one. [Lex] You’re not proving causation, but you’re sort of discussing it, implying, sort of hypothesizing without ability to– [Judea] Which discipline you have in mind? I’ll tell you if they are obsolete. (Lex laughs) Or if they are outdated, or they’re about to get outdated. Yes, yes. [Judea] Oh, yeah, tell me which ones you have in mind. [Lex] Well, psychology, you know– [Judea] Psychology, what, SEM? [Lex] No, no, I was thinking of applied psychology, studying, for example, we work with human behavior in semi-autonomous vehicles, how people behave. And you have to conduct these studies of people driving cars.

[Judea] Everything starts with the question: What is the research question? What is the research question? The research question: do people fall asleep when the car is driving itself? Do they fall asleep, or do they tend to fall asleep more frequently [Lex] More frequently than the car not driving itself. [Lex] Not driving itself. That’s a good question, okay. You put people in the car, because it’s real world. You can’t conduct an experiment where you control everything. [Judea] Why can’t you con– You could. [Judea] Turn the automatic module on and off. Because there’s aspects to it that’s unethical, because it’s testing on public roads. The drivers themselves have to make that choice themselves, and so they regulate that. So, you just observe when they drive it autonomously, and when they don’t. But maybe they turn it off when they’re very tired. [Lex] Yeah, that kind of thing. But you don’t know those variables. Okay, so you have now uncontrolled experiment.

[Judea] Uncontrolled experiment. Then we call it an observational study, and from the correlation detected we have to infer a causal relationship, whether it was the automatic piece that caused them to fall asleep, or, so that is an issue that is about 120 years old. [Lex] (laughs) Yeah. [Judea] Oh, I should only go 100 years old, okay? [Lex] (chuckles) Who’s counting? [Judea] Oh, maybe, no, actually I should say it’s 2,000 years old, because we have this experiment by Daniel, about the Babylonian king, that wanted the exiled people from Israel, that were taken in exile to Babylon to serve the king. He wanted to serve them king’s food, which was meat, and Daniel as a good Jew couldn’t eat non-Kosher food, so he asked them to eat vegetarian food. But the king’s overseers said, “I’m sorry, but if the king sees that your performance falls below that of other kids, now, he’s going to kill me.” Daniel said, “Let’s make an experiment. Let’s take four of us from Jerusalem, okay? Give us vegetarian food. Let’s take the other guys to eat the king’s food, and about a week’s time, we’ll test our performance.” And you know the answer, because he did the experiment, and they were so much better than the others, that the king nominated them to superior positions, (laughs) in his case, so it was a first experiment. So that there was a very simple, it’s also the same research questions. We want to know if vegetarian food assists or obstructs your mental ability. So, the question is a very old one. Even Democritus said, “If I could discover one cause of things, I would rather discover one cause than be King of Persia.” The task of discovering causes was in the mind of ancient people from many, many years ago. But, the mathematics of doing that was only developed in the 1920s. So, science has left us orphaned. Science has not provided us with the mathematics to capture the idea of x causes y and y does not cause x. Because all the equations of physics are symmetrical, algebraic. The equality sign goes both ways.

Machine learning and causality

Q10. (Machine learning and causality) [Lex] Okay, let’s look at machine learning. Machine learning today, if you look at deep neural networks, you can think of it as kind of conditional probability estimators. [Judea] Conditional probability. Correct. Beautiful. Well, did you say that? Conditional probability estimators. None of the machine learning people clobbered you? (laughs) Attacked you? [Lex] Most people, and this is why today’s conversation I think is interesting is, most people would agree with you. There’s certain aspects that are just effective today, but we’re going to hit a wall, and there’s a lot of ideas, I think you’re very right, that we’re going to have to return to, about causality. Let’s try to explore it. [Judea] Okay. [Lex] Let’s even take a step back. You invented Bayesian networks, that look awfully a lot like they express something like causation, but they don’t, not necessarily. So, how do we turn Bayesian networks into expressing causation? How do we build causal networks? A causes B, B causes C. How do we start to infer that kind of thing?

(Everything starts from a question) [Judea] We start by asking ourselves question: what are the factors that would determine the value of x? X could be blood pressure, death, hunger. [Lex] But these are hypotheses that we propose– [Judea] Hypotheses, everything which has to do with causality comes from a theory. The difference is only how you interrogate the theory that you have in your mind.

Q10.1 (The initial model for causal reasoning) [Lex] So it still needs the human expert to propose– [Judea] Right. They need the human expert to specify the initial model. Initial model could be very qualitative. Just who listens to whom? By whom listens I mean one variable listens to the other. So, I say okay, the tide is listening to the moon, and not to the rooster crow, okay, and so forth. This is our understanding of the world in which we live, scientific understanding of reality. We have to start there, because if we don’t know how to handle cause and effect relationship when we do have a model, then we certainly do not know how to handle it when we don’t have a model, so that starts first. An AI slogan is representation first, discovery second. But, if I give you all the information that you need, can you do anything useful with it? That is the first, representation. How do you represent it? I give you all the knowledge in the world. How do you represent it? When you represent it, I ask you, can you infer x or y or z? Can you answer certain queries? Is it complex? Is it polynomial? All the computer science exercises, we do, once you give me a representation for my knowledge. Then you can ask me, now that I understand how to represent things, how do I discover them? It’s a secondary thing.
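A qualitative "who listens to whom" model can be written down as a plain mapping from each variable to the variables it listens to. The sketch below is one possible representation, not a method described in the interview; the variable names and the helper `ancestors` are illustrative assumptions. Once the representation exists, simple queries become mechanical, for example "can the rooster's crow influence the tide at all?".

```python
from graphlib import TopologicalSorter

# Each variable maps to the set of variables it "listens to" (its direct causes).
# The variable names are illustrative assumptions.
listens_to = {
    "moon": set(),
    "rooster_crow": set(),
    "tide": {"moon"},  # the tide listens to the moon, not to the rooster
}

def ancestors(var, graph):
    """All variables that can influence `var` through a chain of arrows."""
    seen, stack = set(), list(graph[var])
    while stack:
        parent = stack.pop()
        if parent not in seen:
            seen.add(parent)
            stack.extend(graph[parent])
    return seen

# An order in which every variable appears after everything it listens to.
order = list(TopologicalSorter(listens_to).static_order())

print("tide is influenced by:", ancestors("tide", listens_to))
print("evaluation order:", order)
```

The mapping carries no numbers at all, which matches the remark that the initial model can be purely qualitative; quantities are filled in later from data.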

Q10.2 (The problem of knowledge representation) [Lex] I should echo the statement that mathematics in much of the machine learning world has not considered causation, that A causes B. Just in anything. That seems like a non-obvious thing that you think we would have really acknowledged it, but we haven’t. So we have to put that on the table. Knowledge, How hard is it to create a knowledge from which to work?

In certain area, it’s easy, because we have only four or five major variables. An epidemiologist or an economist can put them down. The minimum wage, unemployment, policy \(x,y,z\), and start collecting data, and quantify the parameters that were left unquantified, with initial knowledge. That’s the routine work that you find in experimental psychology, in economics, everywhere. In health science, that’s a routine thing. But I should emphasize, you should start with the research question. What do you want to estimate? Once you have that, you have to have a language of expressing what you want to estimate. You think it’s easy? No.

Q11. (Expert systems and causality) [Lex] So we can talk about two things, I think. One is how the science of causation is very useful for answering certain questions, and then the other is how do we create intelligent systems that need to reason with causation? So if my research question is how do I pick up this water bottle from the table? All the knowledge that is required to be able to do that, how do we construct that knowledge base? Do we return back to the problem that we didn’t solve in the 80s with expert systems? Do we have to solve that problem, of automated construction of knowledge?

You’re talking about the task of eliciting knowledge from an expert. [Lex] Task of eliciting knowledge from an expert, or self discovery of more knowledge, more and more knowledge. So, automating the building of knowledge as much as possible. [Judea] It’s a different game, in the causal domain, because essentially it is the same thing. You have to start with some knowledge, and you’re trying to enrich it. But you don’t enrich it by asking for more rules. You enrich it by asking for the data. To look at the data, and quantifying, and ask queries that you couldn’t answer when you started. You couldn’t because the question is quite complex, and it’s not within the capability of ordinary cognition, of ordinary person, ordinary expert even, to answer.

Q11.1 [Lex]So what kind of questions do you think we can start to answer? [Judea] Even a simple, I suppose, yeah. (laughs) I start with easy one. [Lex] Let’s do it.

[Judea] Okay, what’s the effect of a drug on recovery? Was it the aspirin that caused my headache to be cured, or was it the television program, or the good news I received? This is already, see, it’s a difficult question because it’s: find the cause from effect. The easy one is find effect from cause.

Q11.2 (Expressing causal questions mathematically; the role of do) [Lex] That’s right. So first you construct a model saying that this is an important research question. This is an important question. Then you– I didn’t construct a model yet. I just said it’s an important question. Important question. And the first exercise is, express it mathematically. What do you want to prove? Like, if I tell you what will be the effect of taking this drug? Okay, you have to say that in mathematics. How do you say that? Yes. [Judea] Can you write down the question? Not the answer. I want to find the effect of a drug on my headache. Right. [Judea] Write it down, write it down. That’s where the do-calculus comes in. (laughs) [Judea] Yes. The do-operator, the do-operator. Do-operator, yeah. Which is nice. It’s the difference between association and intervention. Very beautifully sort of constructed. Yeah, so we have a do-operator. So, the do-calculus connected– and the do-operator itself, connects the operation of doing to something that we can see. Right. So as opposed to the purely observing, you’re making the choice to change a variable– That’s what it expresses. And then, the way that we interpret it, the mechanism by which we take your query, and we translate it into something that we can work with, is by giving it semantics, saying that you have a model of the world, and you cut off all the incoming arrows into x, and you’re looking now in the modified, mutilated model, you ask for the probability of y. That is interpretation of doing x, because by doing things, you’ve liberated them from all influences that acted upon them earlier, and you subject them to the tyranny of your muscles.
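The "cut off all the incoming arrows into x" reading of the do-operator can be tried out on a toy model. Everything in the sketch below is an assumption made for illustration: the graph Z -> X, Z -> Y, X -> Y, the probabilities, and the function names. Passing `do_x` performs the surgery, so X stops listening to Z, and the difference between seeing X and doing X shows up in the numbers.

```python
import random

def simulate(n, do_x=None, seed=0):
    """Draw n samples from a toy model Z -> X, Z -> Y, X -> Y.

    If do_x is given, the arrow Z -> X is cut and X is set to do_x.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z = rng.random() < 0.5                      # common cause of X and Y
        if do_x is None:
            x = rng.random() < (0.8 if z else 0.2)  # X listens to Z
        else:
            x = do_x                                # surgery: X no longer listens to Z
        y = rng.random() < 0.1 + 0.3 * x + 0.5 * z  # Y listens to X and Z
        rows.append((z, x, y))
    return rows

def mean_y(rows):
    """Fraction of samples with Y true."""
    return sum(y for _, _, y in rows) / len(rows)

observed = simulate(200_000)
# Association: compare Y between units that happened to have X true or false.
seeing = (mean_y([r for r in observed if r[1]])
          - mean_y([r for r in observed if not r[1]]))
# Intervention: compare Y between the two mutilated models do(X=1) and do(X=0).
doing = (mean_y(simulate(200_000, do_x=True, seed=1))
         - mean_y(simulate(200_000, do_x=False, seed=2)))

print(f"seeing: {seeing:.3f}")
print(f"doing:  {doing:.3f}")
```

In this toy model the observed contrast is roughly twice the interventional one, because Z pushes X and Y in the same direction; only the mutilated model isolates what X itself does to Y.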

Q11.3 (How do we get data about doing?) [Lex] So you (chuckles) you remove all the questions about causality by doing them. So there is one level of questions. Answer questions about what will happen if you do things. If you do, if you drink the coffee, or if you take the aspirin. [Judea] Right. [Lex] So how do we get the doing data?

(laughs) Hah. Now the question is, if you cannot run experiments, right, then we have to rely on observational study. So first we could, [Lex] sorry to interrupt, we could run an experiment, where we do something, where we drink the coffee, and the do-operator allows you to sort of be systematic about expressing that. [Judea] To imagine how the experiment will look like even though we cannot physically and technologically conduct it. I’ll give you an example. What is the effect of blood pressure on mortality? I cannot go down into your vein and change your blood pressure. But I can ask the question, which means I can have a model of your body. I can imagine how the blood pressure change will affect your mortality. How? I go into the model, and I conduct this surgery, about the blood pressure, even though physically I cannot do it.

Q12. (Does intervention change the observation?) [Lex] Let me ask the quantum mechanics question. Does the doing change the observation? Meaning, the surgery of changing the blood pressure–

[Judea] No, the surgery is very delicate. [Lex] It’s very delicate. Infinitely delicate. (laughs) [Judea] Incisive and delicate, which means, do-x means I’m going to touch only x. Directly into x. So, that means that I change only things which depend on x, by virtue of x changing. But I don’t change things which do not depend on x. Like, I wouldn’t change your sex, or your age. I just change your blood pressure, okay?

Q12.1 (Interventions that cannot be carried out) [Lex] So, in the case of blood pressure, it may be difficult or impossible to construct such an experiment.

[Judea] No, but physically, yes. But hypothetically no. If we had a model, that is what the model is for. So, you conduct surgeries on the models. You take it apart, put it back. That’s the idea for model. It’s the idea of thinking counterfactually, imagining, and that idea of creativity.

[Lex] So by constructing that model you can start to infer if the blood pressure leads to mortality, which increases or decreases, whi– [Judea] I construct a model. I still cannot answer it. I have to see if I have enough information in the model that would allow me to find out the effects of intervention from an uninterventional study, from a hands-off study.

Q12.2 (What does causal modeling need?) [Lex] So what’s needed–

[Judea] We need to have assumptions about who affects whom. If the graph has a certain property, the answer is “yes, you can get it from observational study.” If the graph is too mushy, bushy, the answer is, “no, you cannot.” Then you need to find either different kind of observation that you haven’t considered, or one experiment. [Lex] So, basically, that puts a lot of pressure on you to encode wisdom into that graph. [Judea] Correct. But you don’t have to encode more than what you know. God forbid. The economists are doing that. They call them identifying assumptions. They put assumptions, even if they don’t prevail in the world, they put assumptions so they can identify things.
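One well-known graph property that makes the answer "yes" is the back-door criterion: if an observed set Z blocks every path from X to Y that starts with an arrow into X, then P(Y | do(X=x)) equals the sum over z of P(Y | x, z) P(z). The sketch below is an illustration under assumptions, not something derived in the interview: it assumes the toy graph Z -> X, Z -> Y, X -> Y with Z observed, invented probabilities, and hypothetical helper names. It estimates the effect of X on Y from purely observational samples.

```python
import random
from collections import Counter

# Observational data from an assumed toy model Z -> X, Z -> Y, X -> Y.
rng = random.Random(0)
counts = Counter()
for _ in range(200_000):
    z = rng.random() < 0.5
    x = rng.random() < (0.8 if z else 0.2)
    y = rng.random() < 0.1 + 0.3 * x + 0.5 * z
    counts[(z, x, y)] += 1

n = sum(counts.values())

def p_y_given(x, z):
    """Observed P(Y=1 | X=x, Z=z)."""
    yes, no = counts[(z, x, True)], counts[(z, x, False)]
    return yes / (yes + no)

def p_z(z):
    """Observed P(Z=z)."""
    return sum(c for (zz, _, _), c in counts.items() if zz == z) / n

def p_y_do(x):
    """Back-door adjustment: sum over z of P(Y=1 | x, z) * P(z)."""
    return sum(p_y_given(x, z) * p_z(z) for z in (False, True))

effect = p_y_do(True) - p_y_do(False)
print(f"estimated effect of do(X): {effect:.3f}")
```

The estimate should land near 0.3, the coefficient on X in the generating model, even though no experiment was run. If Z were unobserved, or if further arrows made the graph bushier, this formula would no longer be licensed, which is the trade-off discussed in the conversation.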

[Lex] Yes, beautifully put. But, the problem is you don’t know what you don’t know. [Judea] You know what you don’t know, because if you don’t know, you say it’s possible that x affects the traffic tomorrow. It’s possible. You put down an arrow which says it’s possible. Every arrow in the graph says it’s possible. [Lex] So there’s not a significant cost to adding arrows. [Judea] The more arrows you add, the less likely you are to identify things from purely observational data. So if the whole world is bushy, and everybody affects everybody else, the answer is, you can answer it ahead of time: I cannot answer my query from observational data. I have to go to experiments (the more causal links there are, the less can be identified from observation alone).

Counterfactual questions

Q13. (Counterfactual questions) [Lex] So, you talk about machine learning as essentially learning by association, or reasoning by association, and this do-calculus is allowing for intervention. I like that word. You also talk about counterfactuals. [Judea] Yeah. [Lex] And trying to sort of understand the difference between counterfactuals and intervention, first of all, what are counterfactuals, and why are they useful? Why are they especially useful as opposed to just reasoning what effect actions have?

[Judea] Well, counterfactuals contain what we normally call explanations. [Lex] Can you give an example of what kind of– [Judea] If I tell you that acting one way affects something else, I didn’t explain anything yet. But if I ask you, was it the aspirin that cured my headache, I’m asking for an explanation: what cured my headache? And putting a finger on the aspirin provides an explanation. It was the aspirin that was responsible for your headache going away. If you hadn’t taken the aspirin, you would still have a headache.

[Lex] So by saying, “If I didn’t take aspirin, I would have a headache,” you’re thereby saying, “The aspirin is the thing that removed the headache.” [Judea] Yes, but you have to have another point of information. I took the aspirin, and my headache is gone. It’s very important information. Now we’re reasoning backward, and I say, “Was it the aspirin?”

[Lex] Yeah. By considering what would have happened if everything is the same, but I didn’t take aspirin. [Judea] That’s right. So we know that things took place, you know? Joe killed Schmo. And Schmo would be alive had Joe not used his gun. Okay, so that is the counterfactual. It has a conflict here, a clash between the observed fact (he did shoot, okay) and the hypothetical predicate, which says, had he not shot. You have a clash, a logical clash: the two cannot exist together. That’s a counterfactual, and that is the source of our explanation of the idea of responsibility, regret, and free will.

Q14. (Counterfactuals and causality in physics) [Lex] Yes, it certainly seems that’s the highest level of reasoning, right? Counterfactual. [Judea] Yes, and physicists do it all the time. [Lex] Who does it all the time? [Judea] Physicists. [Lex] Physicists. [Judea] In every equation of physics. You have Hooke’s law, and you put one kilogram on the spring, and the spring is one meter, and you say, “Had this weight been two kilograms, the spring would have been twice as long.” It’s not a problem for physicists to say that. In the mathematics, though, it is in the form of an equation, equating the weight, the proportionality constant, and the length of the spring. We don’t have the asymmetry in the equations of physics, although every physicist thinks counterfactually. Ask high school kids, had the weight been three kilograms, what would be the length of the spring? They can answer it immediately, because they do the counterfactual processing in their mind, and then they put it into an algebraic equation and they solve it. But a robot cannot do that.
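The spring example can be written out as the usual three counterfactual steps (abduction, action, prediction). This is only a sketch under the simplification used in the conversation, namely that length is proportional to weight; the function name and its parameters are hypothetical and chosen for illustration.

```python
def counterfactual_length(observed_weight, observed_length, hypothetical_weight):
    """Answer: what would the length have been, had the weight been different?"""
    # Step 1 (abduction): use the observation to pin down the unknown
    # proportionality constant of this particular spring.
    constant = observed_length / observed_weight
    # Step 2 (action): replace the observed weight with the hypothetical one.
    weight = hypothetical_weight
    # Step 3 (prediction): apply the same law with the updated weight.
    return constant * weight


# One kilogram stretched the spring to one meter; what if it had been two?
print(counterfactual_length(1.0, 1.0, 2.0))
```

The asymmetry lives in the procedure rather than in the equation: the weight is set and the length responds, never the other way around.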

Q14.1 (Teaching robots these relationships) [Lex] How do you make a robot learn these relationships? [Judea] Why use the word “learn”? Suppose you tell him, can you do it? Before you go learning, you have to ask yourself: suppose I give him all the information. Can the robot perform the task that I ask him to perform? Can he reason and say, “No, it wasn’t the aspirin. It was the good news we received on the phone.” [Lex] Right, because, well, unless the robot had a model, a causal model of the world. I’m sorry I have to linger on this– [Judea] But now we have to linger, and we have to say, “How do we do it?” How do we build it?

Q14.2 (Building a causal model without a team of human experts running around) [Lex] Yes. How do we build a causal model without a team of human experts running around– [Judea] No, why did you go to learning right away? You are too much involved with learning. [Lex] Because I like babies. Babies learn fast, and I’m trying to figure out how they do it. [Judea] Good. That’s another question: how do the babies come out with the counterfactual model of the world? And babies do that. They know how to play in the crib. They know which ball hits another one, and they learn it by playful manipulation of the world. Their simple world involves all these toys and balls and chimes (laughs) but if you think about it, it’s a complex world. [Lex] We take for granted how complicated– [Judea] And the kids do it by playful manipulation, plus parental guidance, peer wisdom, and hearsay. They meet each other, and they say, “You shouldn’t have taken my toy.” (laughs)

Q15. (How is causal information integrated?) [Lex] Right, and these multiple sources of information, they’re able to integrate. So, the challenge is about how to integrate, how to form these causal relationships from different sources of data. [Judea] Correct. [Lex] So, how much causal information is required to be able to play in the crib with different objects? [Judea] I don’t know. I haven’t experimented with the crib. (chuckles) [Lex] Okay, not a crib– [Judea] I know, it’s a very interesting– [Lex] Manipulating physical objects, opening the pages of a book, all the physical manipulation tasks, do you have a sense? Because my sense is the world is extremely complicated.

[Judea] Extremely complicated. I agree, and I don’t know how to organize it, because I’ve been spoiled by easy problems such as cancer and death, okay? (laughs) [Lex] First we have to start trying to– [Judea] No, but it’s easy, easy in the sense that you have only 20 variables, and they are just variables. They are not mechanics, okay? It’s easy. You just put them on the graph and they speak to you. (laughs) [Lex] And you’re providing a methodology for letting them speak. [Judea] I’m working only in the abstract. The abstract is knowledge in, knowledge out, data in between.

Q16. (How are causal graphs constructed?) [Lex] Now, can we take a leap to trying to learn, when it’s not 20 variables but 20 million variables, trying to learn causation in this world. Not learn, but somehow construct models. I mean, it seems like you would only have to be able to learn, because constructing it manually would be too difficult. Do you have ideas of–

[Judea] I think it’s a matter of combining simple models from many, many sources, from many, many disciplines. And many metaphors. Metaphors are the basis of human intelligence. [Lex] Yeah, so how do you think about a metaphor in terms of its use in human intelligence? [Judea] A metaphor is an expert system. It maps a problem with which you are not familiar to a problem with which you are familiar. Let me give you a great example. The Greeks believed that the sky is an opaque shell. It’s not really infinite space; it’s an opaque shell, and the stars are holes poked in the shell, through which you see the eternal light. It was a metaphor. Why? Because they understood how you poke holes in shells. They were not familiar with infinite space. And we are walking on the shell of a turtle, and if you get too close to the edge, you’re going to fall down to Hades, or wherever, yeah. That’s a metaphor. It’s not true. But this kind of metaphor enabled Eratosthenes to measure the radius of the Earth, because he said, “Come on. If we are walking on a turtle shell, then the ray of light coming to this place will be at a different angle than the one coming to that place. I know the distance. I’ll measure the two angles, and then I have the radius of the shell of the turtle.” And he did. And his measurement was very close to the measurements we have today. It was, what, 6,700 kilometers, was the Earth? That’s something that would not occur to a Babylonian astronomer, even though the Babylonian astronomers were the machine learning people of the time. They fit curves, and they could predict the eclipse of the moon much more accurately than the Greeks, because they fit curves. That’s a different metaphor, something that you’re familiar with, again, a turtle shell. What does it mean to be familiar? Familiar means that answers to certain questions are explicit. You don’t have to derive them.

[Lex] And they were made explicit because somewhere in the past you’ve constructed a model of that– [Judea] You’re familiar with it, so the child is familiar with billiard balls. So the child could predict that if you let loose of one ball, the other one will bounce off. You attain that by familiarity. Familiarity is answering questions, and you store the answer explicitly. You don’t have to derive it. So this is the idea of a metaphor. All our life, all our intelligence, is built around metaphors, mapping from the unfamiliar to the familiar, but the marriage between the two is a tough thing, which we haven’t yet been able to algorithmatize.

[Lex] So, you think of that process of using metaphor to leap from one place to another. We can call it reasoning. Is it a kind of reasoning? [Judea] It is reasoning by metaphor, but– [Lex] Reasoning by metaphor. Do you think of that as learning? So, learning is a popular terminology today in a narrow sense.

[Judea] It is, it is definitely. So you may not, you’re right. It’s one of the most important kinds of learning: taking something which theoretically is derivable, and storing it in an accessible format. I’ll give you an example: chess, okay? Finding the winning starting move in chess is hard. But there is an answer. Either there is a winning move for white, or there isn’t, or it is a draw. So, the answer to that is available through the rules of the game. But we don’t know the answer. So what does a chess master have that we don’t have? He has stored explicitly an evaluation of certain complex patterns of the board. We don’t have it, ordinary people, like me. I don’t know about you. I’m not a chess master. So for me, I have to derive things that for him are explicit. He has seen it before, or he has seen the pattern before, or similar patterns before, and he generalizes, and says, “Don’t move; it’s a dangerous move.”

Q17. (How far are we from causal machines?) [Lex] It’s just that, not in the game of chess, but in the game of billiard balls, we humans are able to initially derive very effectively and then reason by metaphor very effectively, and we make it look so easy, and it makes one wonder how hard it is to build it in a machine. In your sense, (laughs) how far away are we from being able to construct–

[Judea] I don’t know. I’m not a futurist. All I can tell you is that we are making tremendous progress in the causal reasoning domain. Something that I even dare to call a revolution, the causal revolution, because what we have achieved in the past three decades is something that dwarfs everything that was derived in the entire history.

Q17.1 (How does machine learning combine with causality?) [Lex] So there’s an excitement about current machine learning methodologies, and there’s really important good work you’re doing in causal inference. Where do these worlds collide, and what does that look like?

[Judea] First they gotta work without collisions. (laughs) It’s got to work in harmony. The human is going to jumpstart the exercise by providing qualitative, noncommitting models of how the universe works, how reality, the domain of discourse, works. The machine is going to take over from that point, and derive whatever the calculus says can be derived, namely, quantitative answers to our questions. These are complex questions. I’ll give you some examples of complex questions that boggle your mind if you think about them. You take the results of studies in diverse populations, under diverse conditions, and you infer the cause-effect relationship in a new population which doesn’t even resemble any of the ones studied. You do that by do-calculus. You do that by generalizing from one study to another. See, what’s common between the two? What is different? Let’s ignore the differences and pull out the commonality. And you do it over maybe 100 hospitals around the world. From that, you can get real mileage from big data. It’s not only that you have many samples; you have many sources of data.

The relationship between temporal order and causality¶

Q18. (The relationship between temporal order and causality) [Lex] So that’s a really powerful thing, I think, especially for medical applications. Cure cancer, right? That’s how, from data, you can cure cancer. So we’re talking about causation, which is the temporal relationships between things. [Judea] Not only temporal. It is structural and temporal. Temporal precedence by itself cannot replace causation.

[Lex] Is temporal precedence, the arrow of time in physics, important? [Judea] Yeah, it’s important, necessary. [Lex] It’s important. [Judea] Yes. [Lex] Is it? [Judea] Yes, I’ve never seen a cause propagate backwards.

[Lex] But if we use the word cause, there are relationships that are timeless. I suppose that’s still the forward arrow of time. But are there relationships, logical relationships, that fit into the structure?

Q18.1 (Do-calculus can be seen as a generalized form of logic) [Judea] Sure. All do-calculus is logical relationships. [Lex] That doesn’t require a temporal. It has just the condition that you’re not traveling back in time. [Judea] Yes, correct. [Lex] So it’s really a generalization, a powerful generalization, of what– [Judea] Of boolean logic. [Lex] Yeah, boolean logic. [Judea] Yes. [Lex] That is sort of simply put, and allows us to reason about the order of events, the source– [Judea] Not about, between. But not deriving the order of events. We are given cause-effect relationships. They ought to be obeying the time precedence relationship. We are given that, and now we ask questions about other causal relationships that could be derived from the initial ones, but were not given to us explicitly. Like the case of the firing squad I gave you in the first chapter, where I ask, “What if rifleman A declined to shoot? Would the prisoner still be dead?” To decline to shoot means that he disobeyed orders. The rules of the game were that he is an obedient marksman. That’s how you start. That’s the initial order, but now you ask a question about breaking the rules. What if he decided not to pull the trigger, because he became a pacifist? You and I can answer that. The other rifleman would have hit and killed him, okay? I want a machine to do that. Is it so hard to ask a machine to do that? It’s such a simple task. But they have to have a calculus for that.
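The firing-squad question is small enough to compute by hand, which makes it a convenient illustration of what such a calculus has to do. The sketch below assumes a deterministic model with a single background variable (the court order); the function names, the dictionary keys, and the `do` argument are hypothetical names introduced here, not an API from any library.

```python
def firing_squad(court_order, do=None):
    """Evaluate the structural equations; `do` overrides a variable's own equation."""
    do = do or {}
    v = {"court_order": court_order}
    v["captain"] = do.get("captain", v["court_order"])  # captain signals iff the court orders
    v["A"] = do.get("A", v["captain"])                  # rifleman A obeys the captain
    v["B"] = do.get("B", v["captain"])                  # rifleman B obeys the captain
    v["dead"] = do.get("dead", v["A"] or v["B"])        # either shot is fatal
    return v


def counterfactual(evidence, do):
    """Abduction, action, prediction over the single background variable."""
    worlds = []
    for court_order in (False, True):
        factual = firing_squad(court_order)
        # Abduction: keep only background states consistent with what was observed.
        if all(factual[name] == value for name, value in evidence.items()):
            # Action and prediction: rerun the modified model in that same state.
            worlds.append(firing_squad(court_order, do))
    return worlds


# The prisoner is dead; what if rifleman A had declined to shoot?
result = counterfactual(evidence={"dead": True}, do={"A": False})
print([world["dead"] for world in result])
```

Overriding A’s equation leaves the captain’s signal and rifleman B untouched, so the model reproduces the answer “you and I can give”: the prisoner would still be dead.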

Q19. (How can causal reasoning be learned?) [Lex] Yes, yeah. But the curiosity, the natural curiosity for me, is that yes, you’re absolutely correct and it’s important, and it’s hard to believe that we haven’t done this seriously, extensively, already a long time ago. So, this is really important work, but I also want to know, maybe you can philosophize about how hard it is to learn.

[Judea] Look, let’s assume learning. We want learning, okay? We want to learn. So what do we do? We put a learning machine that watches execution trials in many countries, in many (laughs) locations, okay? All the machine can learn is to see shot or not shot. Dead, not dead. A court issued an order or didn’t, okay, just the facts. From the facts, you don’t know who listens to whom. You don’t know that the condemned person listens to the bullets, that the bullets are listening to the captain, okay? All we hear is one command, two shots, dead, okay? A triple of variables: yes, no, yes, no, okay. From that, can you learn who listens to whom? And can you answer the question? No.

[Lex] Definitively, no. But don’t you think you can start proposing ideas for humans to review?

[Judea] You want a machine to learn it, all right, you want a robot. So the robot is watching trials like that, 200 trials, and then he has to answer the question: what if rifleman A refrained from shooting? [Lex] Yeah. So how do we do that? (laughs) [Judea] That’s exactly my point. If looking at the facts doesn’t give you the strings behind the facts–

[Lex] Absolutely, but so you think of machine learning, as it’s currently defined, as only something that looks at the facts and tries to– [Judea] Right now they only look at the facts. [Lex] Yeah, so is there a way to modify, in your sense–

[Judea] Yeah, playful manipulation. [Lex] Playful manipulation. [Judea] Doing the interventionist kind of things. But it could be at random. For instance, the rifleman is sick that day, or he just vomits, or whatever. So, we can observe this unexpected event, which introduces noise. The noise still has to be random to be able to relate it to randomized experiments, and then you have observational studies, from which to infer the strings behind the facts. It’s doable to a certain extent. But now that we’re experts in what you can do once you have a model, we can reason back and say what kind of data you need to build a model.

Strong AI¶

Q20. (Expectations for future AI) [Lex] Got it. So, I know you’re not a futurist, but are you excited? Have you, when you look back at your life, longed for the idea of creating a human-level intelligence– [Judea] Well, yeah, I’m driven by that. All my life I’m driven just by one thing. (laughs) But I go slowly. I go from what I know to the next step, incrementally. [Lex] So, without imagining what the end goal looks like, do you imagine–

[Judea] The end goal is going to be a machine that can answer sophisticated questions: counterfactuals, regret, compassion, responsibility, and free will.

[Lex] So what is a good test? Is a Turing test a reasonable test? [Judea] A Turing test of free will doesn’t exist yet. There’s not– [Lex] How would you test free will? That’s a– [Judea] So far we know only one thing, merely (laughs) if robots can communicate, with reward and punishment among themselves, hitting each other on the wrists and saying, “You shouldn’t have done that.” Playing better soccer because they can do that.

[Lex] What do you mean, because they can do that? [Judea] Because they can communicate among themselves. [Lex] Because of the communication, they can do the soccer.

[Judea] Because they communicate like us, with rewards and punishment: yes, you didn’t pass the ball at the right time, and so forth; therefore you’re going to sit on the bench for the next two. If they start communicating like that, the question is, will they play better soccer? [Lex] As opposed to what? [Judea] As opposed to what they do now, without this ability to reason about reward and punishment. Responsibility. [Lex] And counterfactuals. [Judea] So far, I can only think about communication. [Lex] Communication, and not necessarily in natural language, but just communication. [Judea] Just communication, and that’s important, to have a quick and effective means of communicating knowledge. If the coach tells you you should have passed the ball, ping, he conveys so much knowledge to you, as opposed to what? Go down and change your software, right. That’s the alternative. But the coach doesn’t know your software. So how can a coach tell you you should have passed the ball? But our language is very effective: you should have passed the ball. You know your software. You tweak the right module, okay, and next time you don’t do it.

Q21. (Machines with moral sense) [Lex] Now that’s for playing soccer, where the rules are well defined. [Judea] No, no, no, they’re not well defined. When you should pass the ball– [Lex] Is not well defined. [Judea] No, it’s very noisy. Yes, you have to do it under pressure. (laughs) [Lex] It’s art. But in terms of aligning values between computers and humans, do you think this cause and effect type of thinking is important to align the values, morals, ethics under which machines make decisions? Is the cause effect where the two can come together?

[Judea] Cause effect is a necessary component to build an ethical machine, because the machine has to empathize, to understand what’s good for you, to build a model of you, as a recipient. We should be very much– What is compassion? To imagine that you suffer pain as much as me. [Lex] As much as me. [Judea] I do have already a model of myself, right? So it’s very easy for me to map you to mine. I don’t have to rebuild a model. It’s much easier to say, okay, therefore, I will not hit you, okay?

[Lex] And the machine has to imagine, has to try to fake being human, essentially, so you can imagine that you’re like me, right?

[Judea] Whoa, whoa, whoa, who is “me”? That’s further; that’s consciousness. You have a model of yourself. Where do you get this model? You look at yourself as if you are part of the environment. If you build a model of yourself versus the environment, then you can say, “I need to have a model of myself. I have abilities; I have desires, and so forth,” okay? I have a blueprint of myself, though not in full detail, because I cannot get the whole thing problem, but I have a blueprint. So at that level of a blueprint, I can modify things. I can look at myself in the mirror and say, “Hmm, if I tweak this model, I’m going to perform differently.” That is what we mean by free will. [Lex] And consciousness. What do you think is consciousness? Is it simply self awareness, including yourself in the model of the world? [Judea] That’s right. Some people tell me no, this is only part of consciousness, and then they start telling what they really mean by consciousness, and I lose them. For me, consciousness is having a blueprint of your software.

Q22. (Concerns about causal AI?) [Lex] Do you have concerns about the future of AI, all the different trajectories of all the research? [Judea] Yes. [Lex] Where’s your hope where the movement heads? Where are your concerns?

[Judea] I’m concerned, because I know we are building a new species that has the capability of exceeding us, exceeding our capabilities, and can breed itself and take over the world, absolutely. It’s a new species; it is uncontrolled. We don’t know the degree to which we control it. We don’t even understand what it means to be able to control this new species. So, I’m concerned. I don’t have anything to add to that because it’s such a gray area, that unknown. It never happened in history. The only time it happened in history was evolution with the human being. [Lex] Right. [Judea] And it was very successful, was it? (laughs) Some people say it was a great success.

[Lex] For us, it was, but a few people along the way, yeah, a few creatures along the way would not agree. So, just because it’s such a gray area, there’s nothing else to say. [Judea] We have a sample of one. [Lex] Sample of one. [Judea] It’s us. [Lex] Some people would look at you, and say, yeah, but we were looking to you to help us make sure that sample two works out okay. [Judea] Correct. Actually we have more than a sample of one. We have theories. And that’s good; we don’t need to be statisticians. So, sample of one doesn’t mean poverty of knowledge. It’s not. Sample of one plus theory, conjecture or theory, of what could happen, that we do have. But I really feel helpless in contributing to this argument, because I know so little, and my imagination is limited, and I know how much I don’t know, but I’m concerned.

Pearl’s personal history¶

Q23. (Pearl’s personal history) [Lex] You were born and raised in Israel. [Judea] Born and raised in Israel, yes. [Lex] And later served in the Israel military defense forces. [Judea] In the Israel Defense Forces. [Lex] What did you learn from that experience? [Judea] From that experience? (laughs) [Lex] There’s a kibbutz in there as well. [Judea] Yes, because I was in the Nahal, which is a combination of agricultural work and military service. I was an idealist. I wanted to be a member of the kibbutz throughout my life, and to live a communal life, and so I prepared myself for that. Slowly, slowly I wanted a greater challenge.

[Lex] So, that’s a far world away, both in t– [Judea] But I learned from that, what a kidada. It was a miracle. It was a miracle that I served in the 1950s. I don’t know how we survived. The country was under austerity. It tripled its population from 600,000 to 1.8 million when I finished college. No one went hungry. Austerity, yes. When you wanted to make an omelet in a restaurant, you had to bring your own egg. And they imprisoned people for bringing food from the farming area, from the villages, to the city. But no one went hungry, and I always add to that: higher education did not suffer any budget cuts. They still invested in me, in my wife, in our generation, to get the best education that they could. So I’m really grateful for the opportunity, and I’m trying to pay back now. It’s a miracle that we survived the war of 1948. They were so close to a second genocide. It was all planned. (laughs) But we survived it by a miracle, and then the second miracle that not many people talk about, the next phase, how no one went hungry, and the country managed to triple its population. You know what it means to triple a population? Imagine the United States going from, what, 350 million to (laughs) unbelievable.

[Lex] This is a really tense part of the world. It’s a complicated part of the world, Israel and all around. Religion is at the core of that complexity, or one of the components– [Judea] Religion is a strong motivating force for many, many people in the Middle East, yes. [Lex] In your view, looking back, is religion good for society? [Judea] That’s a good question for robotics, you know? [Lex] There’s echoes of that question. [Judea] Should we equip robots with religious beliefs? Suppose we find out, or we agree, that religion is a good thing, it will keep you in line. Should we give the robot the metaphor of a god? As a metaphor, the robot will get it without us, also. Why? Because a robot will reason by metaphor. And what is the most primitive metaphor a child grows with? Mother’s smile, father’s teaching, father image and mother image, that’s God. So, whether you want it or not, (laughs) the robot will, assuming the robot is going to have a mother and a father. It may only have a programmer, though, which doesn’t supply warmth and discipline. Well, discipline it does. So, the robot will have a model of the trainer. And everything that happens in the world, cosmology and so on, is going to be mapped into the programmer. (laughs) That’s God.

Q24. (The story of Pearl’s son?) [Lex] The thing that represents the origin of everything for that robot. [Judea] It’s the most primitive relationship. [Lex] So it’s going to arrive there by metaphor. And so the question is if overall that metaphor has served us well, as humans. [Judea] I really don’t know. I think it did, but as long as you keep in mind it is only a metaphor. (laughs) [Lex] So, if you think we can, can we talk about your son?

[Judea] Yes, yes. [Lex] Can you tell his story? [Judea] His story, well– [Lex] Daniel. [Judea] His story is known. He was abducted in Pakistan by an al-Qaeda-driven sect, under various pretenses. I don’t even pay attention to what the pretense was. Originally they wanted to have the United States deliver some promised airplanes, I– It was all made up, you know, all these demands were bogus. I don’t know, really, but eventually he was executed, in front of a camera.

[Lex] At the core of that is hate and intolerance. [Judea] At the core, yes, absolutely, yes. We don’t really appreciate the depth of the hate with which billions of people are educated. We don’t understand it. I just listened recently to what they teach you in Mogadishu. (laughs) When the water stopped in the tap, we knew exactly who did it. The Jews. [Lex] The Jews. [Judea] We didn’t know how, but we knew who did it. We don’t appreciate what it means to us. The depth is unbelievable. [Lex] Do you think all of us are capable of evil, and the education, the indoctrination, is really what creates evil? [Judea] Absolutely we are capable of evil. If you are indoctrinated sufficiently long, and in depth, we are capable of ISIS, we are capable of Nazism, yes, we are. But the question is whether we, after we have gone through some Western education, and we learn that everything is really relative, that there is no absolute God, there is only a belief in God, whether we are capable, now, of being transformed, under certain circumstances, to become brutal. [Lex] Yeah. [Judea] That is a qu– I’m worried about it, because some people say yes, given the right circumstances, given a bad economic crisis, you are capable of doing it, too, and that worries me. I want to believe that I’m not capable.

[Lex] Seven years after Daniel’s death, you wrote an article at the Wall Street Journal titled “Daniel Pearl and the Normalization of Evil.” [Judea] Yes. [Lex] What was your message back then, and how did it change today, over the years? [Judea] I lost. [Lex] What was the message? [Judea] The message was that we are not treating terrorism as a taboo. We are treating it as a bargaining device that is accepted. People have grievances, and they go and bomb restaurants. It’s normal. Look, you’re not even surprised when I tell you that. Twenty years ago you’d say, “What? For a grievance you go and blow up a restaurant?” Today it’s become normalized. The banalization of evil. And we have created that for ourselves, by normalizing it, by making it part of political life. It’s a political debate. Every terrorist yesterday becomes a freedom fighter today and tomorrow becomes a terrorist again. It’s switchable.

[Lex] And so, we should call out evil when there’s evil. [Judea] If we don’t want to be part of it. [Lex] Become it. [Judea] Yeah, if we want to separate good from evil, that’s one of the first things, that, in the Garden of Eden, remember? The first thing that God tells them was “Hey, you want some knowledge? Here is the tree of good and evil.”

[Lex] So this evil touched your life personally. Does your heart have anger, sadness, or is it hope? [Judea] Look, I see some beautiful people coming from Pakistan. I see beautiful people everywhere. But I see horrible propagation of evil in this country, too. It shows you how populistic slogans can catch the mind of the best intellectuals. [Lex] Today is Father’s Day. [Judea] I didn’t know that. [Lex] Yeah, what’s a fond memory you have of Daniel? [Judea] Oh, many good memories remain. He was my mentor. He had a sense of balance that I didn’t have. (laughs) [Lex] Yeah. [Judea] He saw the beauty in every person. He was not as emotional as I am, more looking at things in perspective. He really liked every person. He really grew up with the idea that a foreigner is a reason for curiosity, not for fear. This one time we went in Berkeley, and a homeless man came out from some dark alley and said, “Hey man, can you spare a dime?” (Judea gasps) I retreated back, you know, two feet back, and Danny just hugged him and said, “Here’s a dime. Enjoy yourself. Maybe you want some money to take a bus or whatever.” Where did he get it? Not from me.

Q25. (Advice for others?) [Lex] Do you have advice for young minds today dreaming about creating, as you have dreamt, creating intelligent systems? What is the best way to arrive at new break-through ideas and carry them through the fire of criticism and past conventional ideas?

[Judea] Ask your questions. Really, your questions are never dumb. And solve them your own way. (laughs) And don’t take “no” for an answer. If they’re really dumb, you’ll find out quickly, by trial and error, that they’re not leading any place. But follow them, and try to understand things your way. That is my advice. I don’t know if it’s going to help anyone. [Lex] No, that’s brilliantly put. There’s a lot of inertia in science, in academia. It is slowing down science. Yeah, those two words, “your way,” that’s a powerful thing. It’s against inertia, potentially. [Judea] Against your professor. (Lex laughs) I wrote “The Book of Why” in order to democratize common sense. [Lex] Yeah. (laughs) [Judea] In order to instill rebellious spirits in students, so they wouldn’t wait until the professor gets things right. (both laugh)

Q26. (What would the epitaph say?) [Lex] So you wrote the manifesto of the rebellion against the professor. (laughs) [Judea] Against the professor, yes. [Lex] So looking back at your life of research, what ideas do you hope ripple through the next many decades? What do you hope your legacy will be?

[Judea] I already have a tombstone carved. (both laugh) [Lex] Oh, boy. [Judea] The fundamental law of counterfactuals. That’s what it, it’s a simple equation. Put a counterfactual in terms of a model surgery. That’s it, because everything follows from there. If you get that, all the rest. I can die in peace, and my students can derive all my knowledge by mathematical means. [Lex] The rest follows.
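For reference, the equation Pearl alludes to is usually written as below in his published work. The notation is supplied here and is not spelled out in the interview: M is the structural causal model, M_x is the same model after “surgery” (the equation for X is replaced by the constant X = x), and u is a particular setting of the background variables.

```latex
Y_x(u) = Y_{M_x}(u)
```

Read: the value Y would have taken in situation u, had X been x, is the solution for Y in the modified model M_x evaluated at that same u.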

[Lex] Thank you so much for talking today. I really appreciate it. [Judea] My thank you for being so attentive and instigating. (both laugh) We did it. [Lex] We did it. The coffee helped. Thanks for listening to this conversation with Judea Pearl.

2019 AAAI WHY talk¶

Slides can be downloaded from: https://why19.causalai.net/

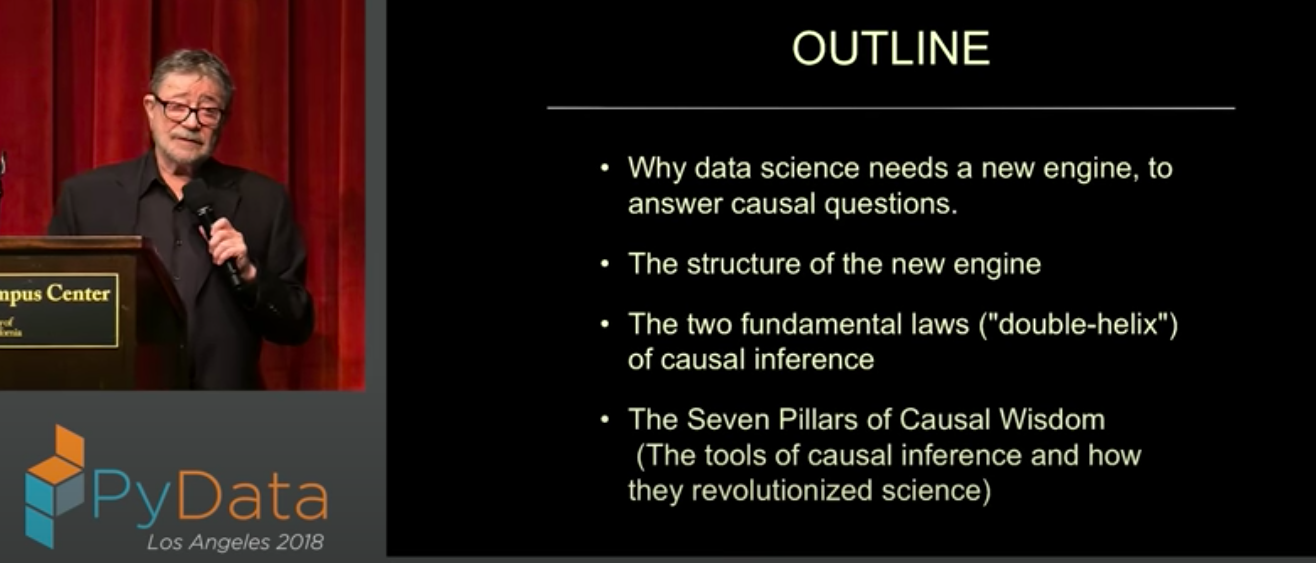

2018 Keynote talk at PyData¶

Only similar slides could be found; download them from: https://why19.causalai.net/

Outline¶

The two fundamental laws of causal inference:

how counterfactuals and probabilities of counterfactuals are deduced from a given SCM and

how features of the observed data are shaped by the graphical structure of an SCM.
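The second item can be illustrated with a toy model: in a chain X → Z → Y, the graph says Z separates X from Y, so X and Y should be independent given Z in the data while remaining dependent marginally. The sketch below checks this exactly from probability tables; the chain, the variable names, and all numbers are made up for illustration and are not taken from the talk.

```python
from itertools import product

# Illustrative chain X -> Z -> Y with binary variables (all numbers are made up).
P_X1 = 0.3
P_Z1_GIVEN_X = {0: 0.2, 1: 0.9}
P_Y1_GIVEN_Z = {0: 0.1, 1: 0.7}


def bern(p_one, value):
    """Probability that a binary variable with P(1) = p_one takes `value`."""
    return p_one if value == 1 else 1.0 - p_one


# Joint distribution implied by the graph: P(x, z, y) = P(x) P(z | x) P(y | z).
joint = {
    (x, z, y): bern(P_X1, x) * bern(P_Z1_GIVEN_X[x], z) * bern(P_Y1_GIVEN_Z[z], y)
    for x, z, y in product((0, 1), repeat=3)
}


def prob(**fixed):
    """Marginal probability of the given assignments, e.g. prob(x=1, z=0)."""
    return sum(
        p for (x, z, y), p in joint.items()
        if all({"x": x, "z": z, "y": y}[k] == v for k, v in fixed.items())
    )


def max_dependence_given_z():
    """Largest gap between P(x, y | z) and P(x | z) P(y | z)."""
    return max(
        abs(prob(x=x, y=y, z=z) / prob(z=z)
            - prob(x=x, z=z) / prob(z=z) * prob(y=y, z=z) / prob(z=z))
        for x, y, z in product((0, 1), repeat=3)
    )


def max_marginal_dependence():
    """Largest gap between P(x, y) and P(x) P(y)."""
    return max(
        abs(prob(x=x, y=y) - prob(x=x) * prob(y=y))
        for x, y in product((0, 1), repeat=2)
    )


print(max_dependence_given_z(), max_marginal_dependence())
```

The conditional gap is zero up to floating-point error, while the marginal gap is clearly positive: the structure of the graph, not the particular numbers, is what forces the conditional independence.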

(The host introduces Judea Pearl.) Hello, thank you everyone for coming in today. It’s wonderful to have you all with us, and this is the last keynote of the conference. It is my pleasure to introduce you to Dr. Judea Pearl. He’s a Chancellor’s emeritus professor at UCLA and the winner of the 2011 Turing Award, also considered the Nobel Prize of computing. He’s best known for championing the probabilistic approach to artificial intelligence and the development of Bayesian networks. So I’m handing the mic now to Judea; give him a huge round of applause.

(Data science needs a new engine to answer causal questions.) Thank you, thank you. Can you hear me? I’ll try to be as concise as possible and convey to you the essence of my talk here in the very first slide. I have a proverb which I composed in the car service coming here. I think it summarizes things very clearly. My message is very simple: “Data is a window to reality, and data science is a glass that enables us to look through the window; it is not a mirror through which data looks at itself under makeup. That is the essence of things, which means that data science is a two-body science: it connects data and reality, and interprets reality in view of the data.” Well, you can say we all know that, so let’s go and see what has been done with data science thus far. My outline will be, one, why data science needs a new engine to answer causal questions.

(We are born with causal thinking.) I call it a new engine because, for us computer scientists, a piece of software is an engine, and I call it a new engine because it is a new organ in our mind, one that has its own logic and its own unique features, one that we haven’t even been aware that we have. It is like someone who finds out one day that he or she has a liver; we can live our lives without knowing that we have a liver. But once we discover that the liver is there, and its function, then it’s the duty of the physician or the physiologist to understand the function and to be able to repair it when it goes wrong.

(The SCM derived from the fundamental laws of causal inference.) So I’m gonna talk about the structure of this new engine (I don’t want to keep on saying liver) and the two fundamental laws of causal inference. You can look at them as a double helix, because once you see and you acquire and you commit yourself to these two fundamental laws, you don’t have to listen to any lectures like this one; you can do everything yourself. It is derivable mathematically. That’s a nice thing about science: sometimes you get the key and the rest is derivable, like the axioms of geometry. And then I’m gonna spend the second part of my talk convincing you that it is not just a mathematical gimmick but that it has applications.

(The seven pillars of causal inference.) I will convey the applications through a list of seven pillars. These are tools that you will be able to use once you acquire the double helix, and these are tools that go beyond data science, tools that enable you to answer questions that ordinary statistics cannot answer and that ordinary machine learning cannot answer today. They will tomorrow, and it has revolutionized data-intensive sciences from epidemiology to economics to social science.
